AG Valentí Software Installation

To reduce redundant installations across users and to provide a common, hassle-free base, commonly used software and libraries are installed under the user name "ag-valenti".

Software and Utilities

Here, we provide a list of available software and utilities. For their usage, see Activation of Software and Tools.

Please email requests for the installation of additional software and libraries, questions, or contributions to this website to support, or post them on our Mattermost board.

| Name | Short description | Version | Availability |

|---|---|---|---|

| WIEN2k | DFT code | 19.1, 21.1, 23.1, 24.1 | OK |

| FPLO | DFT code | 18.00-57, 21.00-61, 22.00-62, 22.00-64 | OK |

| VASP | DFT code | 5.4.4, 6.2.1, 6.3.0, 6.4.2, 6.5.0 | OK |

| Quantum Espresso | DFT code | 6.4, 6.5, 6.8, 7.0, 7.1 | OK |

| VESTA | Crystal structure viewer | 3.5.2, 3.5.5, 3.5.7 | OK |

| Wannier90 | Wannierise DFT bands | 2.1.0, 3.1.0 | OK |

| Fermisurfer | Plot Fermi surfaces | 2.2.1, 2.4.0 | OK |

| TEMA | Heisenberg coupling calculator | 1.0, 1.1, 2.0 | OK |

| w2dynamics | DMFT code | 1.1.5 | OK |

| TRIQS | DMFT code | 3.1.1, 3.3.x | OK |

Developer Tools and Libraries

Here, we provide a list of available developer tools and libraries. For their usage, see Activation of Software and Tools.

Please email requests for installation of additional software and libraries, questions or contributions to this website to support.

| Name | Version | Availability |

|---|---|---|

| Intel Compiler | 2021.2.0, 2019.3.199, 2019.0.117 | OK |

| Intel MKL | 2021.2.0, 2019.3.199, 2019.0.117 | OK |

| Intel MPI | 2021.2.0, 2019.3.199, 2019.0.117, 2020.1.217 | OK |

| HPCX MPI | 2.10 | OK |

| Open MPI | 4.0.1, 4.1.1 | OK |

| Open UCX | 1.13.1, 1.8.0 | OK |

| Eigen | 3.3.8 | OK |

| FFTW | 3.3.8, 3.3.10 | OK |

| Armadillo | 10.1.1 | OK |

| Boost | 1.75.0 | OK |

| ALPSCore | 2.3.0-rc.1-1 | OK |

| ALPSCore CT-HYB | 1.0.3 | OK |

| Maxent | 1.1.1 | OK |

| ana_cont | 1.0 | OK |

| julia | 1.6.5 | On all desktops, dfg-xeon, mallorca |

| iTensor for julia | 0.2.13 | On all desktops, dfg-xeon, mallorca |

Getting Started

Prerequisites

To access the installation you have to be in the group ag-valenti, otherwise you will get "permission denied" errors.

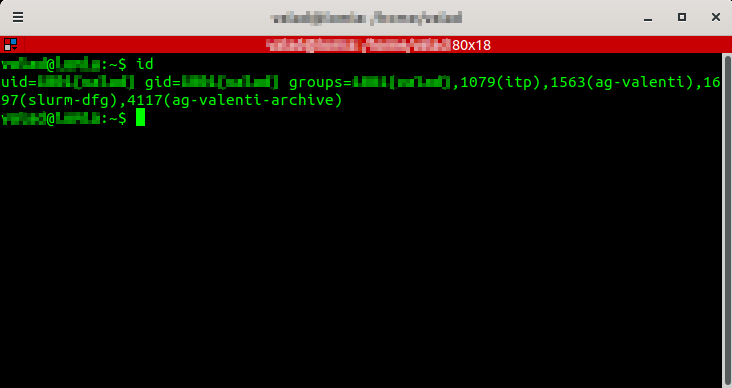

Check this with the terminal command id. The output should look somewhat like this:

For running jobs on the group-only compute clusters (mallorca, barcelona, bilbao, dfg-xeon, fplo) you need to be in the group slurm-dfg.

Please contact the ITP system administrator to be added to these groups.
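The membership check can also be scripted. The following is a minimal sketch that tests for the two groups named in this section; the helper name in_group is an illustrative choice and not part of the group installation.

```shell
#!/bin/bash
# Report whether the current user belongs to the groups named in this section.

# in_group GROUP: succeed if the current user is a member of GROUP.
# (in_group is a hypothetical helper, not provided by the installation.)
in_group() {
    id -nG | tr ' ' '\n' | grep -qxF -- "$1"
}

for group in ag-valenti slurm-dfg; do
    if in_group "$group"; then
        echo "OK:      member of $group"
    else
        echo "MISSING: not a member of $group"
    fi
done
```

A line starting with MISSING means that the corresponding group still has to be requested.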

Activation of Software and Tools

Software and Utilities

There is no single path containing all binaries to avoid confusion and errors. Each user must request the activation of a desired program via a loader script that lies in the user home of ag-valenti. The script should be called like this:

source /home/ag-valenti/activate <option>

Loading different programs can lead to problems in the dynamic linking stage. Please try to avoid unnecessary loading of software and do this in your job script or in separate terminal profiles.

For a complete list and description of available options please use the -h or --help flags.

Please do not add this to your .bashrc file as this may have consequences for the stability of other software. Only the default option is considered safe for loading in your .bashrc.
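One further way to keep loaded programs from interfering with each other, not described by the authors, is to activate a program only inside a subshell. The sketch below assumes that the loader only changes environment variables; with_program and the ACTIVATE variable are hypothetical names introduced for illustration.

```shell
#!/bin/bash
# Run a single command with one program activated, leaving the calling
# shell untouched. ACTIVATE can be overridden, e.g. for testing.
ACTIVATE="${ACTIVATE:-/home/ag-valenti/activate}"

# with_program OPTION COMMAND [ARGS...]   (hypothetical helper)
with_program() {
    local option="$1"
    shift
    (
        # Everything the loader changes (PATH, library paths, ...) stays
        # inside this subshell and is discarded when it exits.
        source "$ACTIVATE" "$option" || exit 1
        "$@"
    )
}

# Example on a group machine: with_program vasp which vasp_std
```

Because the environment is restored after every call, two programs that need different versions of the same library can be used from the same terminal, one call at a time.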

Developer Tools and Libraries

In addition to numerical tools we also host commonly used developer tools and libraries that may be more up-to-date than the system default.

To use them, type

source /home/ag-valenti/activate_dev <option>

A detailed description of options and available versions of tools or libraries is available using the help flags -h,--help.

For example, to use Eigen do

source /home/ag-valenti/activate_dev eigen

g++ your_code.cpp -o your_executable

Partitions

Our group has its own HPC cluster with various partitions. A partition is a collection of nodes (computers). The nodes have different RAM sizes and CPUs with varying numbers of cores.

In the table below, we provide an overview of the currently available resources. 'Recommended usage' specifies partition properties like optimization and does not exclude software which might be available. A list of available software and developer tools can be found in the sections Software and Utilities and Developer Tools and Libraries.

| Partition | Number of nodes | Name of nodes | Number of cores | Memory [GB] | CPU type | Ubuntu version | Recommended usage |
|---|---|---|---|---|---|---|---|
| dfg-big (retired) | 3 | dfgbig[05,11,14] | 48 | 128 | AMD Opteron(tm) | 20.04 | Everything (no Infiniband) |
| dfg-big (retired) | 3 | dfgbig[06,12,13] | 48 | 256 | AMD Opteron(tm) | 20.04 | Everything (no Infiniband) |
| dfg-big (retired) | 4 | dfgbig[07-10] | 64 | 256 | AMD Opteron(tm) | 20.04 | Everything (no Infiniband) |
| fplo | 2 | dfg-fplo[1,2] | 12 | 256 | Intel(R) Xeon(R) | 20.04 | FPLO (no Infiniband) |
| fplo | 4 | dfg-fplo[3-6] | 16 | 256 | Intel(R) Xeon(R) | 20.04 | FPLO (no Infiniband) |
| dfg-xeon | 6 | dfg-xeon[03-08] | 16 | 128 | Intel(R) Xeon(R) | 20.04 | Everything |
| dfg-xeon | 7 | dfg-xeon[09-15] | 20 | 128 | Intel(R) Xeon(R) | 20.04 | Everything |
| dfg-xeon | 1 | dfg-xeon[16] | 24 | 256 | Intel(R) Xeon(R) | 20.04 | Everything |
| barcelona | 8 | barcelona[01-08] | 40 | 192 | Intel(R) Xeon(R) | 20.04 | Everything |
| mallorca | 4 | mallorca[01-04] | 48 | 256 | AMD EPYC | 20.04 | Jobs with runtime ≤ 12 hours |
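The numbers in the table can be compared with what Slurm itself reports. The following sketch wraps the standard sinfo command; partition_overview is a hypothetical helper name, and the function only works on a machine where the Slurm client tools are installed.

```shell
#!/bin/bash
# partition_overview: one line per partition and node group, as reported by Slurm.
# (partition_overview is a hypothetical helper, not part of the installation.)
partition_overview() {
    if ! command -v sinfo >/dev/null 2>&1; then
        echo "sinfo not found: run this on a machine with Slurm installed" >&2
        return 127
    fi
    # %P partition, %D node count, %c CPUs per node,
    # %m memory per node in MB, %N node names
    sinfo --format="%12P %6D %6c %10m %N"
}

# Example: partition_overview
```

Note that Slurm reports memory in MB, whereas the table lists GB.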

Versions

The ag-valenti program loader is designed to host different versions for each program. It is intended to maintain old versions and add new versions rather than performing in-place upgrades. Each user has the freedom to choose from the installed versions.

Usually, the newest installed version is loaded automatically. If a specific version is desired an argument has to be specified:

source /home/ag-valenti/activate <name>@<version>

where <version> has the form a.b or a.b.c. Available versions for a specific program are listed by -h,--help:

source /home/ag-valenti/activate --help

Documentation

Some programs come with documentation embedded in the source tree. In this case we provide a symbolic link to the respective directory under /home/ag-valenti/docs to abstract the installation details from the user. Use ls /home/ag-valenti/docs to list all available documentation. Note that it is provided "as is". Use cd $SOFTWARE_DOCUMENTATIONS to get there.

Tips and Tricks

Custom terminal profiles

In case one uses e.g. WIEN2k and VASP very often in an interactive terminal session one might be tempted to load both programs in the default .bashrc file. This can lead to problems if the programs use different versions of the same dynamic library. In this case it can be helpful to define custom terminal profiles.

- Define aliases for each program, e.g.

  alias loadvasp="source /home/ag-valenti/activate vasp"

- Create links to the terminal that automatically start bash with the option --rcfile .vaspbashrc

  File: vaspterm

  #!/bin/bash

  gnome-terminal -- bash --rcfile .vaspbashrc

- Create separate profiles

  - Go to Edit -> Preferences

  - Next to Profiles click on + and type a name

  - Go to Command and check Run a custom command instead of my shell

  - Add under Custom command e.g. source .vaspbashrc

  - Choose to Hold the terminal open under When command exits.

  A terminal with this profile can then be opened with gnome-terminal --window-with-profile=<profile_name>. An executable can be created like this:

  File: abcterm

  #!/bin/bash

  gnome-terminal --window-with-profile=<profile_name>

  Don't forget chmod u+x abcterm.

- Any of the above can be made more convenient by defining a custom keyboard shortcut for your terminal (see below).
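The content of .vaspbashrc is not spelled out above. A minimal sketch is given here, assuming the file should behave like the regular interactive setup plus VASP. When bash is started with --rcfile it does not read ~/.bashrc on its own, so the file sources it explicitly. The prompt marker is an illustrative addition.

```shell
# ~/.vaspbashrc: start-up file for a terminal dedicated to VASP (sketch)

# Start from the regular interactive setup, if there is one.
if [ -r "$HOME/.bashrc" ]; then
    source "$HOME/.bashrc"
fi

# Activate VASP on top of it (skipped on machines without the loader).
if [ -r /home/ag-valenti/activate ]; then
    source /home/ag-valenti/activate vasp
fi

# Mark the prompt so that this terminal is easy to tell apart (assumption:
# purely cosmetic, not required by the loader).
PS1="(vasp) ${PS1:-\$ }"
```

An analogous file can be created per program, so that each terminal profile carries exactly one activated program.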

Custom keyboard shortcuts

Having keyboard shortcuts for often used tasks is very handy. This is how it is done:

- Open the system settings menu and navigate to: Settings > Devices > Keyboard.

- Scroll to the bottom and click on +

- Enter whatever name you like and the command for which you want to create the shortcut.

- Click on Set Shortcut and press whatever key combination seems convenient to you.

Slurm batch script essentials

Slurm is a workload manager that distributes work over the available resources. A single work entity is called a "job". To obtain an allocation we typically use the batch submission system, where a number of jobs are pushed onto a queue and then executed as resources become available.

sbatch

To submit a job use the command

sbatch job.sh

where job.sh is a batch script of the following form:

#!/bin/bash

#SBATCH --partition=PARTITION

#SBATCH --ntasks=NTASKS

#SBATCH --time=dd-hh:mm:ss

#SBATCH --job-name=JOBNAME

# your command to be executed

| Option | Description |
|---|---|
| partition | Name of the partition: itp, itp-big, barcelona, dfg-xeon, dfg-big, fplo |
| ntasks | Number of tasks. This will allocate ntasks processors unless --cpus-per-task is specified. Sets the variable $SLURM_NTASKS. |
| cpus-per-task | Request a number of CPU cores per task. The total number of processor cores allocated is then ntasks*cpus-per-task. Useful for shared memory programs, because a single task is guaranteed to run on one node. Groups of processors belonging to the same task will also sit on the same node. |
| time | Time limit for the allocation in the format dd-hh:mm:ss. After this has run out the job will be canceled. |
| job-name | Name of your job |
| mem | Requests a specific amount of memory (in MB). This limit can be temporarily exceeded, but will fail the job eventually. Use 5120 or 5G for 5GB. |
| mail-type | Control which status mails will be sent to your email address. NONE, BEGIN, END, FAIL, ALL are common values. |
| nodelist | Request a specific list of nodes. The job will contain all of these nodes and possibly additional hosts as needed to satisfy resource requirements. The list may be specified as a comma-separated list of hosts, a range of hosts (host[1-5,7,...] for example), or a filename. The node list will be assumed to be a filename if it contains a "/" character. If you specify a minimum node or processor count larger than can be satisfied by the supplied node list, additional resources will be allocated on other nodes as needed. Duplicate node names in the list will be ignored. The order of the node names in the list is not important; the node names will be sorted by Slurm. This is very useful to restrict your calculations to specific nodes and to avoid spamming them over the whole cluster. The node names are identical to the ssh names of the individual nodes. |
| exclude | Opposite to -w, --nodelist. Explicitly exclude certain hosts from the list. This is very handy if you want to avoid spamming your jobs all over the cluster. The host names are identical to the ssh names of the individual hosts. |
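To illustrate how ntasks and cpus-per-task combine, here is a sketch of a job script for a program that uses several tasks with several threads each. The partition, the resource numbers and the job name are example values only. SLURM_NTASKS and SLURM_CPUS_PER_TASK are set by Slurm inside a job; the fallback value 1 is only there so that the script can be tried outside of Slurm.

```shell
#!/bin/bash
#SBATCH --partition=barcelona
#SBATCH --ntasks=4
#SBATCH --cpus-per-task=10
#SBATCH --mem=8G
#SBATCH --time=00-02:00:00
#SBATCH --job-name=hybrid_example
#SBATCH --mail-type=END,FAIL

# Slurm exports these inside a job; the defaults only matter for a dry run
# outside of Slurm.
ntasks="${SLURM_NTASKS:-1}"
cpus_per_task="${SLURM_CPUS_PER_TASK:-1}"

# Give every task as many threads as cores were requested for it.
export OMP_NUM_THREADS="$cpus_per_task"

echo "tasks:            $ntasks"
echo "threads per task: $OMP_NUM_THREADS"
echo "cores in total:   $((ntasks * cpus_per_task))"

# your command to be executed, e.g. an MPI program with OpenMP threads
```

With these example values the job asks for 4 * 10 = 40 cores, which corresponds to one full node of the barcelona partition.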

squeue

Use the command squeue to display the current job queue.

A few notable options:

| Option | Description |
|---|---|
| -u, --user | Show only the jobs belonging to the given user |
| -p, --partition | Show only the jobs running and queueing on the given partition |
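The two options can be combined. The sketch below lists only your own jobs, optionally restricted to one partition; my_jobs is a hypothetical helper name and requires the Slurm client tools.

```shell
#!/bin/bash
# my_jobs [PARTITION]: list the current user's jobs, optionally for one partition.
# (my_jobs is a hypothetical helper, not part of the installation.)
my_jobs() {
    if ! command -v squeue >/dev/null 2>&1; then
        echo "squeue not found: run this on a machine with Slurm installed" >&2
        return 127
    fi
    if [ -n "${1:-}" ]; then
        squeue --user="$(id -un)" --partition="$1"
    else
        squeue --user="$(id -un)"
    fi
}

# Example: my_jobs barcelona
```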

Software Help

WIEN2k

The most recent user guide can be found over at TU Wien. For the one shipping with any installed version visit the documentation archive.

Saving, restoring and cleaning a calculation

WIEN2k is notorious for creating large files that are essentially a complete waste of disk space. However, the program comes with tools that alleviate that problem.

Use save_lapw -d <directory> to store all input files and all files necessary for restoring a calculation in ./<directory>. For more information use the option -h.

After changing input files or after another calculation the previous run can be restored with restore_lapw -d <directory> if save_lapw has been run before.

Once your calculation is completed clean up the directory with clean_lapw or w2k_clean. This keeps only the important files and deletes the large files. Should those be needed later on they can be recovered quickly. For this, check which subprogram creates which file in the user guide.

WIEN2k Utility Suite

Tools that help with common WIEN2k related tasks are collected in this suite. Currently it contains:

| Tool | Description |
|---|---|
| w2k_clean | Cleans large files (like clean_lapw) but keeps the :log file. |
| w2k_machines_setup | Generates a valid .machines file based on the resources requested from Slurm. This works for an arbitrary number of (fully allocated) nodes, but is limited to k-parallelization (no atom-parallelization via MPI at the moment). |
| fix_wannier90_hopping | Converts a Wannier90 hopping file to a format without degeneracies. |

Example script

A valid job script for parallel jobs looks like this:

#!/bin/bash

#SBATCH --partition=barcelona

#SBATCH --ntasks=40

#SBATCH --time=00-10:00:00

#SBATCH --job-name=my_job_name

. /home/ag-valenti/activate wien

w2k_machines_setup

run_lapw -p -e 0.0001 -c 0.0001

k-parallelization uses process communication via SSH. Be sure to set up an SSH public-private key pair without a passphrase via ssh-keygen and add the public key to your ~/.ssh/authorized_keys file. Otherwise you will face "Permission denied" errors.

Unfortunately, due to the way k-point parallelization works, it is necessary to have the activate line in the .bashrc file if you are using the -p option.
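The key setup for k-parallelization can be sketched as follows. The sketch assumes that your home directory is shared between the compute nodes, so that one key pair authorized in ~/.ssh/authorized_keys is enough. The key type ed25519 and the helper name setup_passwordless_ssh are illustrative choices, not requirements of WIEN2k.

```shell
#!/bin/bash
# setup_passwordless_ssh [DIR]: create a key pair without passphrase in DIR
# (default: ~/.ssh) and authorize it for logins to the same account.
# (hypothetical helper; assumes a home directory shared between all nodes)
setup_passwordless_ssh() {
    local dir="${1:-$HOME/.ssh}"
    local key="$dir/id_ed25519"
    mkdir -p "$dir" && chmod 700 "$dir" || return 1
    # Never overwrite an existing key.
    if [ ! -f "$key" ]; then
        ssh-keygen -q -t ed25519 -N "" -f "$key" || return 1
    fi
    # Append the public key only once.
    touch "$dir/authorized_keys"
    if ! grep -qxF -- "$(cat "$key.pub")" "$dir/authorized_keys"; then
        cat "$key.pub" >> "$dir/authorized_keys"
    fi
    chmod 600 "$dir/authorized_keys"
}

# Example: setup_passwordless_ssh
```

Afterwards, ssh to one of the compute nodes should succeed without a password prompt. The first connection to each node may still ask to confirm the host key.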

FPLO

FPLO executables ship with the default naming convention <name>a.b-c-x86_64, where a.b-c is the version number. This allows for the parallel installation of different versions. Since our version management loads only one specific version at a time, this tedious naming scheme is unnecessary. Therefore, in addition to the default binaries we provide convenient symbolic links fplo, fedit, dirac, which can be used independently of the specific version of FPLO that is loaded.

A sample job script is provided below:

#!/bin/bash

#SBATCH --partition=fplo

#SBATCH --ntasks=1

#SBATCH --mem=6G

#SBATCH --time=00-01:00:00

#SBATCH --job-name=my_job_name

. /home/ag-valenti/activate fplo

fplo

Note that the version can be changed in the argument to the activate script.

Since FPLO binaries are statically linked, one can safely load FPLO along with any other program, even other versions of FPLO. In the latter case the shortened executable names become ill-defined and the longer standard names should be used instead.

Using CIF files as FPLO input files

The FPLO input file =.in is created by the editor fedit. The tool cif2fplo, originally written by Milan Tomić and provided as part of the default set of programs, automatically converts a CIF file to FPLO input, which can then be edited with fedit.

Usage is kept rather simple: cif2fplo filename, where filename is the name of or path to an existing CIF file. The =.in file will be created in the current working directory of the shell.

Cleaning up FPLO files

FPLO files follow a very annoying naming convention: they start with = or + and therefore have to be typed in quotes. We provide a very simple tool to delete all or a selection of these files: clean_fplo. Use -h for a list of available options. This is especially useful if you've accidentally opened fedit in e.g. your home directory and want to get rid of the files it created.

Using PyFPLO

FPLO ships with a very powerful and handy Python library pyfplo. When loading FPLO you will also have access to pyfplo. With version 21.00-61, one can finally use PyFPLO with Python3!

VASP

VASP requires specific input files that are distributed along with the software. You can find the files at /home/ag-valenti/docs/VASP/input_files.

A sample job script is provided below:

#!/bin/bash

#SBATCH --partition=dfg-xeon

#SBATCH --ntasks=16

#SBATCH --mem=100G

#SBATCH --time=00-01:00:00

#SBATCH --job-name=my_job_name

. /home/ag-valenti/activate vasp

mpirun -np 16 vasp_std
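A variant of this job script is sketched here. It pins the VASP version with the <name>@<version> syntax and takes the number of MPI ranks from $SLURM_NTASKS instead of repeating the number 16. The version 6.4.2 is one of the installed versions listed on this page; the dry-run branch is an illustrative addition so that the script can be tried on a machine without the group installation.

```shell
#!/bin/bash
#SBATCH --partition=dfg-xeon
#SBATCH --ntasks=16
#SBATCH --mem=100G
#SBATCH --time=00-01:00:00
#SBATCH --job-name=my_job_name

# Take the rank count from Slurm; fall back to 1 outside of a job.
nranks="${SLURM_NTASKS:-1}"

if [ -r /home/ag-valenti/activate ]; then
    # Pin the version so that later installations do not change the results.
    . /home/ag-valenti/activate vasp@6.4.2
    mpirun -np "$nranks" vasp_std
else
    # Illustrative fallback for machines without the group installation.
    echo "dry run: would start vasp_std on $nranks MPI ranks"
fi
```

Changing --ntasks is then sufficient to change the number of MPI ranks.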

Pearson Crystal Data

This is no longer usable in Germany. We switched to ICSD. Ask in the group to get the link to the ICSD database.

Shared Folder

There are shared Owncloud folders where different files can be shared (only) with other members of the group. We have the following folders where corresponding files or folders can be stored with the given naming convention:

| Folder | File type | Naming convention |
|---|---|---|
| group_seminar_slides | Slides presented within the group seminar | YYYY-MM-DD_TITLE_LASTNAME |
| theses | Any kind of theses (Bachelor, Master, PhD, ...) | THESISTYPE_FIRSTNAME_LASTNAME |
| recorded_talks | Any kind of recorded talks, tutorials, ... | YYYY-MM-DD_TITLE_LASTNAME |

This way it is easier to keep on top of everything. If you have more than one file to share make a folder, otherwise just upload the file.

Access

To access this folder your ITP account needs to be in the Linux group ag-valenti.

- Open Owncloud

- Login with your ITP account

- You should see a shared folder named group_seminar_slides

Data Management

This section is still up for discussion! Input is still welcome until these measures are put in place.

Here we provide some guidelines as to how data is supposed to be handled. In the following, data refers to published work only.

To ensure that data is reproducible and available independent of the current staff, all information has to be collected in a general repository.

A data set consists of the following elements:

| Element | Details |
|---|---|
| Source code | Source code of the program used to produce the data. In case of licensed software the version number is sufficient. Add a Makefile (or comparable build instructions) and, if applicable, a configuration.txt file that contains the compiler and library versions used. |
| Input files | All input files corresponding to the particular calculation. This includes a script to run the program. Add metadata (parameters used). |
| Output files | Files produced by the program. Only those important for the discussion in the paper are necessary, e.g. files containing the band structure. If a script produced the final data file from a program output file add that here. Please try to combine post processing scripts into one or add an explanatory README file. |
| Plot scripts | Scripts that generate the exact plot found in the paper using data from a specific data set. Make sure that it is clear which data is used. If raw plots have been post processed add a note containing a short explanation of what was changed. |
| LaTeX source | The complete LaTeX source for the reproduction of the manuscript. This includes the figures. You can add additional notes that did not end up in the paper in a directory separated from the paper. |

For different codes, please provide at least the following files.

| General | README, Source code |

|---|---|

| Input files | Configuration files |

| Output files | Program output (if not too big, at least description in README) |

| WIEN2k | SCF | Band structure | DOS | Others |

|---|---|---|---|---|

| Input files | case.in*, case.struct, case.klist | case.insp, case.klist_band | case.int | self generated input files |

| Output files | case.dayfile, :log, case.scf (if not too big) | case.band*.agr (if not too big) | case.dosev* | respective output file |

| FPLO | SCF | Band structure | DOS | Others |

|---|---|---|---|---|

| Input files | =.in | =.in | =.in | =.in, respective input files |

| Output files | version number, out (if not too big) | +band / +bweights (if not too big) | +*dos* (if not too big) | respective output files |

| VASP | RLX | SCF | Band structure | DOS | Others |

|---|---|---|---|---|---|

| Input files | INCAR, POSCAR, KPOINTS, filenames of POTCAR | INCAR, POSCAR, KPOINTS, filenames of POTCAR | INCAR, POSCAR, KPOINTS, filenames of POTCAR | INCAR, POSCAR, KPOINTS, filenames of POTCAR | Respective input files |

| Output files | CONTCAR, OUTCAR (if not too big) | Version number, OUTCAR (if not too big) | band data (if not too big) | dos data (if not too big) | Respective output files |

Repository Structure

Example File Names

The name of the repository should have the format arxivID-short-name-of-paper.

Below an exemplary repository structure is provided.

- root
  - 2101.1234-short-name-of-the-paper
    - data
      - values.dat
      - figure1
        - source_code_info.txt
        - input
          - script.sh
          - parameters.in
        - plot
          - fig1.gnu
      - figure2
        - ...
    - source_code
      - name-v1.0
        - Makefile
        - configuration.txt
        - ...
    - latex
      - ...
  - 2102.1234-short-name-of-the-paper
    - figure1
      - source_code_info.txt
    - figure2
    - band_data
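An empty skeleton for a new repository can be created with a few mkdir calls. The sketch below assumes the layout of the first example (data/figureN with input and plot subdirectories, plus source_code and latex); make_repo_skeleton is a hypothetical helper name.

```shell
#!/bin/bash
# make_repo_skeleton PARENT NAME [NFIGURES]   (hypothetical helper)
# Create the directory layout for one paper below PARENT and print its path.
make_repo_skeleton() {
    local parent="$1" name="$2" nfigures="${3:-1}"
    local repo="$parent/$name"
    local i
    mkdir -p "$repo/source_code" "$repo/latex" || return 1
    for ((i = 1; i <= nfigures; i++)); do
        mkdir -p "$repo/data/figure$i/input" "$repo/data/figure$i/plot" || return 1
        # Placeholder to be filled with program name, version and build details.
        touch "$repo/data/figure$i/source_code_info.txt"
    done
    echo "$repo"
}

# Example: make_repo_skeleton "$HOME/papers" 2101.1234-short-name-of-the-paper 2
```

The function only creates directories and empty placeholder files; existing content is left untouched.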

Storage

After structuring the data, the corresponding folder has to be stored in

/home/ag-valenti-archive/archive

Afterwards, ask the IT admin for a permission change so that the whole group can access the directory.

Goethe-HLR

On the Goethe-HLR things are a bit different. Currently no DFT codes are set up. Users who run their own codes are asked to follow these rules:

- Only modify files in your user home!

- Keep your .bashrc as clean as possible and try to avoid modifying PATH.

- Add module loading to your job scripts.

- If you need help installing something, ask in the group.

Module Loading

Modules are managed via the Modules utility. You only need the command module. Below we list common usage:

| Command | Description |
|---|---|
| module avail | Shows all available modules |
| module load <modulename> | Load the specified module into the environment |
| module unload <modulename> | Unload the specified module from the environment |

By default only global modules will be shown. This may be enough. However, it is recommended that you add the following to your .bashrc

. /home/compmatsc/public/spack/share/spack/setup-env.sh

This will add more group-wide installed modules to your list. Typically you will only need to load the Intel compiler (if preferred) and an MPI implementation. We recommend mpi/intel/2019.5 with the Intel compiler comp/intel/2019.5 and mpi/openmpi/3.1.2-gcc-8.2.0 with the GNU compiler. If in doubt feel free to ask.

Please do not modify anything under /home/compmatsc/public. This will affect all users of the group and you may involuntarily break something. Email support instead.
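In a job script, loading the recommended compiler and MPI combination could look like the following sketch. The module names are the ones recommended in this section; load_toolchain is a hypothetical helper name, and the function reports an error if the module command is not available in the current shell.

```shell
#!/bin/bash
# load_toolchain intel|gnu: load the compiler/MPI pair recommended in this section.
# (load_toolchain is a hypothetical helper, not part of the cluster setup.)
load_toolchain() {
    local modules
    case "${1:-}" in
        intel) modules="comp/intel/2019.5 mpi/intel/2019.5" ;;
        gnu)   modules="mpi/openmpi/3.1.2-gcc-8.2.0" ;;
        *)     echo "usage: load_toolchain intel|gnu" >&2; return 2 ;;
    esac
    # 'module' is a shell function provided by the Modules utility.
    if ! type module >/dev/null 2>&1; then
        echo "module command not available in this shell" >&2
        return 127
    fi
    # Intentionally unquoted: one argument per module name.
    module load $modules
}

# Example inside a job script: load_toolchain intel
```

Keeping the module loading in the job script, as asked for in the rules of this section, leaves the .bashrc clean.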